GRADUATE RESEARCH METHODS - Part [A] SELECTED MATERIALS FOR PARTICIPANTS - COURSE RUN IN 2003

[0] List of Contents for GRM-[A]

1 SHAPING RESEARCH: CORE CONCEPTS

1.1 Conceptualizations of science

1.2 Crucial decisions in designing a project

2 DATA COLLECTION APPROACHES

2.1 Types of data collection methods

2.2 Behavior observation

2.3 Questionnaires & scales (survey research)

2.4 Physical & psychophysiological recordings

2.5 Analysis of documents and traces

2.6 Comparative evaluation & quality criteria

3 SAMPLING: BASIC CONSIDERATIONS

3.1 Defining samples

3.2 Response rates in surveys

4 Excursion: THINKING IN STRUCTURES

4.1 Explicating structures

4.2 Intervening/moderator/mediator variables

4.3 Pertinent analytical tools

5 Memo: EVALUATION RESEARCH

5.1 Definition of "evaluation" (within the social sciences)

5.2 Main methodological issues

5.3 Substantive & methodological reasons for empirical evaluations

5.4 A short list of literature re evaluation research

6 PRACTICAL MANAGEMENT OF RESEARCH

6.1 Structuring tasks

6.2 Considering resources

6.3 Time planning

Given the restricted time for GRM-[A], i.e., only six 90-min sessions, a comprehensive & thorough treatment of these issues is not possible. My idea is to convey the principal concepts and thereby to induce a 'conceptual structure' for data collection methods, which students can then fill in according to their interests and needs.

The two 'blue lists' (distributed in class) provide relevant references and suggestions on what to read for each topic.

[1-A] BR's Views on "Research Methodology for Graduate Students"

General Objectives:

Providing the methodological knowledge which is required

> to understand the assumptions and implications of major approaches to research

> to design, conduct and interpret one's own empirical research of high quality

> to critically evaluate the validity and applicability of published research

Relevant Content Areas:

> Epistemology (i.e., theory of science & research)

> Validity of principal research designs (including experimental vs non-experimental, quant vs qual, cross-sectional vs longitudinal, lab vs field etc)

> Use of major means of data collection (behavior observation, questionnaires & scales, physical/psychophysiological recordings, document analysis)

> Statistical analyses for complex data (incl. expl. & confirm. multivariate analyses, structures of categorical data; analysis of verbal data, causal modeling)

> Special/novel methods/purposes (e.g., utilization of computer technology in research; simulation methods, evaluation research methodology)

> Organization & management of research projects (incl. budgeting of studies)

> Research ethics

[1-B] A few notes on the "Science Game"

Why play the science game?

To identify & understand ...

- what we know now

- what we do not know yet (but could ...)

- what we would need to do to learn about it

- what we cannot know

::::: ABOUT "SCIENCE" :::::

Three kinds of problems:

> Veridicality

> Subjectivity

> Communication

Principal aims

> Guided by explicit rules

> Knowledge integrated into a system (theory)

> Generalization (general laws)

> If feasible: Control thru data

Core requirements

> Aims & methods can be explained

> Science (process & outcomes) is public

> Validity of findings can be explicated

Ultimate "quality" criteria

> Knowledge about facts: "correct"?

> Nomological knowledge: "predictive"?

> Technological knowledge: "effective"?

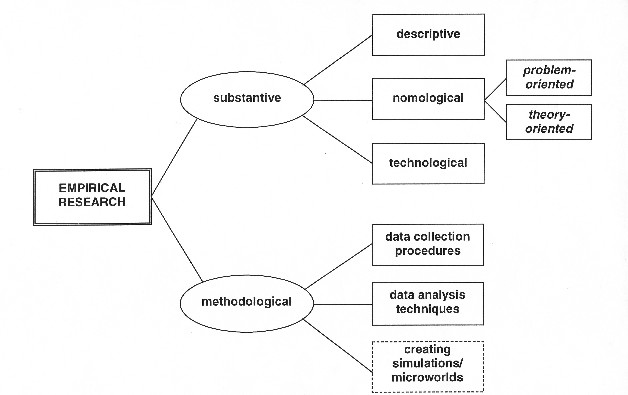

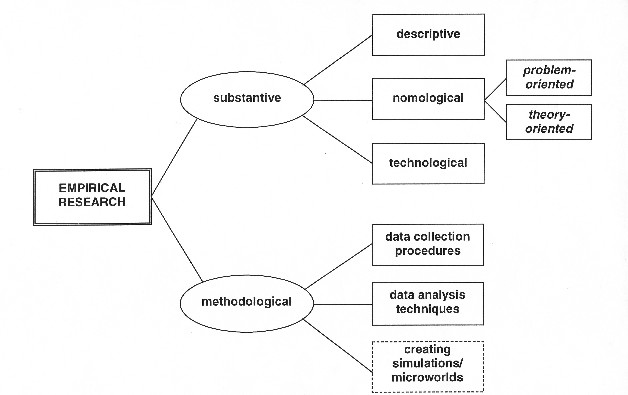

[1-C] Designing Research: Crucial Decisions

Types of investigations

> theoretical versus empirical

> subjects versus simulation

> quantitative versus qualitative

if empirical:

> field study versus lab study

> experiment versus non-exp. study

> general investigation versus case study

> representative sample versus specific groups

> cross-sectional versus longitudinal

> primary data versus secondary analysis

> intra-disciplinary versus inter-disciplinary

Types of data collection:

o Behavior observation

o Questionnaires & scales (survey research)

o Psychophysiological recordings

o Analysis of documents/traces

[1-E] Research Questions: Some Examples

<1> What attitudes do Australians hold towards Asian students?

<2> Is there a relationship between time of day and emotional state?

<3> How does the presence of an adult influence the interaction of playing children?

<4> Do 'prompts' enhance environment-protective behavior?

<5> Is there a higher usage of psychotropic drugs in areas of poor social or environmental quality?

<6> Do extroverted adolescents use more positive and optimistic words/expressions in their communication?

<7> Does exposure to aircraft noise impair human sleep?

<8> Are texts printed in lower case or in capital letters easier to read?

[2-A] Data collection thru Behavior observation: Taxonomy

Observed situation: naturalistic versus manipulated

Observer's role: participating versus non-part.

Observed people: informed ("open o.") versus not informed ("disguised o.")

Observation mode: taking notes versus recording (audio/visual/a-v)

Observing ...: others' versus own behavior

Note on "naturalness": can refer to

> treatment ('IV')

> observed behavior ('DV', 'IV')

> location/'setting' of investigation

Critical for data quality:

> instructions to observer(s) and coder(s)

> coding schemes (content categories) for observations (in situ or recordings)

> procedures to enable control of objectivity & reliability

> Behavior sampling: sampling events vs. sampling times

> Aspects & units of o.'s: location/space, objects therein, actors, activities/acts/events, spoken words, non-verbal behavior & gestures, time

> Biases to be controlled: selective attention, selective encoding, selective memory, expectancy biases (of observers and coders), observer drifts (in coding), personality factors
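One common way to check objectivity & reliability is to have two coders code the same observation units and then compute Cohen's kappa, i.e., their agreement corrected for chance. The sketch below assumes exactly two coders and one nominal category per unit. The category names and data are hypothetical.

```python
from collections import Counter


def cohens_kappa(codes_a, codes_b):
    """Chance-corrected agreement between two coders (one nominal code per unit)."""
    if len(codes_a) != len(codes_b) or not codes_a:
        raise ValueError("both coders must code the same, non-empty list of units")
    n = len(codes_a)
    # observed agreement: share of units placed in the same category by both coders
    p_observed = sum(a == b for a, b in zip(codes_a, codes_b)) / n
    # expected chance agreement: products of the two coders' marginal proportions
    freq_a, freq_b = Counter(codes_a), Counter(codes_b)
    categories = set(freq_a) | set(freq_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in categories) / n ** 2
    if p_expected == 1:
        return 1.0  # both coders used one and the same category for every unit
    return (p_observed - p_expected) / (1 - p_expected)


# hypothetical codes for six observation intervals of playing children
coder_1 = ["talk", "play", "play", "talk", "idle", "play"]
coder_2 = ["talk", "play", "talk", "talk", "idle", "play"]
print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.739
```

Raw agreement in this example is 5/6 (about 0.83). Kappa is lower because part of that agreement would be expected by chance alone. For more than two coders or for ordinal codes, other coefficients (e.g., Fleiss' kappa, weighted kappa) are usually preferred.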


[2-C] Data collection thru Questionnaires: Main types

Verbal means of data collection

> Personality tests

> Scales for judgments, attitudes, impressions, ...

> Questionnaires (designed for particular surveys)

Survey/interview types: main distinctions:

> personal (face-to-face) versus non-personal

> verbal/oral versus written + self-administered

> paper&pencil-based versus computer-assisted

> individual versus group interview

> single versus repeated interviewing (panel)

> standardized <--> non-standardized (re questions, response format, situation)

INTERVIEWER-BASED SURVEY: personal + verbal/oral + p&p data collection

MAIL SURVEY: non-personal + self-administered + p&p data collection

TELEPHONE SURVEY: non-personal + verbal/oral + p&p or c/a data collection

INTERNET/WWW-BASED SURVEY: non-personal + self-administered + c/a data collection

[2-D] Use of computers in survey research

Computer-assisted telephone interviewing = CATI

Modules which may be included in CATI software:

> sampling (eg via RDD = random digit dialing)

> questionnaire construction

> presenting the questionnaire on the screen during the interview

> guiding the interviewer thru split-questions etc

> response recording / data input (CADI)
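Random digit dialing can be illustrated with a short sketch. Random final digits are appended to telephone prefixes that are assumed to be in service, so unlisted numbers get the same chance of selection as listed ones. The prefixes, the four-digit suffix length and the function name are hypothetical. Real CATI software works with actual number banks and screens out non-working numbers.

```python
import random


def rdd_sample(prefixes, n, suffix_digits=4, seed=None):
    """Draw n distinct numbers by appending random digits to known prefixes.

    Every prefix block has the same size, so choosing a prefix uniformly and
    then a suffix uniformly gives every possible number the same probability.
    """
    block_size = 10 ** suffix_digits
    if n > len(prefixes) * block_size:
        raise ValueError("more numbers requested than the prefixes can supply")
    rng = random.Random(seed)
    drawn = set()
    while len(drawn) < n:  # duplicates are simply redrawn (sampling without replacement)
        prefix = rng.choice(prefixes)
        suffix = rng.randrange(block_size)
        drawn.add(f"{prefix}{suffix:0{suffix_digits}d}")
    return sorted(drawn)


# hypothetical exchange prefixes
print(rdd_sample(["(03) 9555 ", "(03) 9556 "], n=5, seed=42))
```

Many generated numbers will not be in service, so the gross number of draws has to be considerably larger than the number of interviews needed.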

Computer-assisted personal interviewing = CAPI

Interviewer uses laptop PC instead of paper&pencil questionnaire

Electronic mail surveys = EMS

Questionnaire distribution and data collection via

> individual E-mailing

> internet list servers

> WebSites which include E-mail facilities

[2-E] Comparison of conventional vs computer-based techniques

| Contact | Conventional interview | Computer-assisted |
|---|---|---|
| In person: interviewer | Paper-and-pencil interviewing | Computer-assisted personal interviewing (CAPI) |
| In person: facilitator | Self-administered questionnaire; audio self-administered questionnaire | Computer-assisted self-administered interviewing/questionnaires (CASI, CASAI, CASQ); audio computer-assisted self-administered interviewing (ACASI) |
| Telephone | Unaided interviewing | Computer-assisted telephone interviewing (CATI); touchtone data entry (TDE); voice recognition entry (VRE) |
| (Snail) mail | Self-administered questionnaire | Disk by mail (DBM) |
| Internet | (Self-administered questionnaire) | E-mail survey (EMS); web surveys with prepared data entry (PDE) |

Impacts of computer-assisted interviewing

o considerable logistic and accuracy gains

o but may slow down the interviewing process

o data processing much faster

o reduces variability across interviewers

o alienating for people unfamiliar with 'IT culture'

o requires expensive technology

Criteria for computer-assisted data collection systems

<after Tourangeau et al 2000>

> Functionality: i.e., the system should meet the requirements for carrying out the tasks

> Consistency: the system's conventions and mappings between actions and consequences should be the same within a questionnaire and a project

> Informative feedback: the system should provide some feedback, such as a confirmation message or movement to the next screen, for every user action

> Transparency: the system should carry out certain functions (e.g., checking responses) and keep the user informed about the process

> Explicitness: the system should make it obvious what actions are possible and how they are to be performed

> Comprehensibility: the system should avoid jargon, abbreviations, arbitrary conventions

> Tolerance: the system should allow for errors, incorporating facilities to prevent, detect, and correct errors

> Efficiency: the system should minimize user effort by, for example, simplifying the actions needed to carry out common operations

> Supportiveness: the system should recognize the cognitive limits of the users and make it unnecessary for them to memorize large numbers of commands, providing ready access to online help instead

> Optimal complexity: the system should avoid both over-simplification and extreme complexity

[2-F] Responding to questions: four main components

| Component | Specific processes |
|---|---|
| Comprehension | Attend to questions and instructions; represent logical form of question; identify question focus (information sought); link key terms to relevant concepts |
| Retrieval | Generate retrieval strategy and cues; retrieve specific, generic memories; fill in missing details |
| Judgment | Assess completeness & relevance of memories; draw inferences based on accessibility; integrate material retrieved; make estimate based on partial retrieval |
| Response | Map judgment onto response category; edit response |

NOTE:

> Models of the responding process may distinguish between "high-road" and "low-road" behavior ("two-track" theories).

> Important: cognitive aspects of survey methodology ("CASM movement")

[2-G] Questionnaire development: Main steps

<1> Theoretical concepts

<2> Variable list

<3> Enquiries (literature)

<4> Developing questions, response formats, materials

<5> Pretest I (specific test interviews)

<6> Questionnaire structure (macro-/micro-design)

<7> Instructions etc

<8> Layout

<9> Preparations for coding & data processing

<10> Pretest II (test sample)

<11> Revision

<12> Printing/sorting/stapling etc

[2-H] Developing questions

Example: Measuring the pro-environmental orientation of residents

Construct: environmentalism

Research variable(s):

1 political activism re env.

2 affect re env. state

3 env.-protecting behavior

Operationalization:

1 membership in 'green' org.

2 env. concern (attitude)

3 participation in recycling

Questions:

3a "do you take your newspapers to a paper recycling place or not?"

3b "do you separate glass in your garbage disposal or not?"

3c "do you buy food in non-recyclable containers or avoid this?"

etc (6 pertinent items)

Response scaling: frequency scale 1..5 = never....always

Data analyses: item analysis re internal consistency

Final variable: index of recycling behavior
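The item analysis step can be sketched as follows. The sketch assumes the recycling items are already coded 1..5 in the same direction. An item worded the other way round would first be reversed (6 - x on a 1..5 scale). The sketch computes three things:

- Cronbach's alpha, as the internal-consistency index
- alpha with each item deleted in turn
- the final index, as each respondent's mean item score

The data and function names are invented for illustration.

```python
from statistics import mean, pvariance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents x items table of numeric scores."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # population variances throughout; using n - 1 instead cancels out in the ratio
    item_variances = sum(pvariance(item) for item in zip(*rows))
    total_variance = pvariance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variances / total_variance)


def alpha_if_deleted(rows):
    """Alpha recomputed with each item left out in turn (needs 3+ items)."""
    k = len(rows[0])
    return [cronbach_alpha([row[:j] + row[j + 1:] for row in rows]) for j in range(k)]


def reverse(score, low=1, high=5):
    """Reverse-code an item scored on a low..high scale."""
    return low + high - score


# invented answers of four respondents to three recycling items (1 = never ... 5 = always)
answers = [[1, 2, 1], [3, 3, 2], [4, 5, 4], [5, 4, 5]]
print(round(cronbach_alpha(answers), 3))  # 0.95
index = [mean(row) for row in answers]  # final variable: index of recycling behavior
```

An item whose removal raises alpha noticeably is a candidate for revision or exclusion before the index is formed.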

Assessing questions:

> comprehensible?

> neutral?

> easy to code?

if pretest data available:

> adequate response distribution?

> intercorrelation pattern?

[2-I] Rating scales as response format: Main issues

Intended measurement level?

> ordinal or interval

Category labels & anchoring?

> words

> numbers

> graphic symbols

* combinations

Number of levels/categories?

> 3, 4, 5, 6, 7, 9, 10, 11, 20, 100

Middle category?

> e.g., on "disagree…agree" Likert scale

Separate "don't know" response category?

=> SPECIAL TOPIC #1: Category versus magnitude scaling

=> SPECIAL TOPIC #2: Psychometric data for verbal scale point labels
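The "don't know" question has a direct consequence at the coding stage. A "don't know" answer is not the same as the scale midpoint, so it is usually kept as a separate (missing) value rather than coded as 3. The minimal sketch below assumes a hypothetical five-point disagree...agree scale with verbal labels.

```python
# hypothetical verbal labels of a five-point Likert-type item
SCALE = {
    "strongly disagree": 1,
    "disagree": 2,
    "neither agree nor disagree": 3,
    "agree": 4,
    "strongly agree": 5,
}
DONT_KNOW = "don't know"


def code_answer(label):
    """Map a verbal answer to its scale value; 'don't know' becomes None (missing)."""
    if label == DONT_KNOW:
        return None
    return SCALE[label]  # raises KeyError for labels outside the scale


def summarize(labels):
    """Count valid and 'don't know' answers; average only the valid ones."""
    codes = [code_answer(label) for label in labels]
    valid = [c for c in codes if c is not None]
    return {
        "n_valid": len(valid),
        "n_dont_know": len(codes) - len(valid),
        "mean": sum(valid) / len(valid) if valid else None,
    }


print(summarize(["agree", "don't know", "strongly agree", "disagree"]))
# {'n_valid': 3, 'n_dont_know': 1, 'mean': 3.6666666666666665}
```

Coding the "don't know" answer as 3 would have pulled the mean to 3.5. It would also have hidden the fact that one respondent gave no substantive answer.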

[2-J] Interviewers in survey research

Functions of interviewers

> creating interview 'atmosphere'

> administering questions

> explaining instruments

> recording responses

depending on the interview type:

* providing feedback

depending on the sampling method:

* finding and/or selecting respondents

Interviewers as source of biases

=> see section on biases below

Training of interviewers

(A) Instructional sessions re:

> Instrument (questionnaire+scales)

> Interviewing behavior <pers. int. or tel. int.>

> Contacting respondents

(B) Practice interviews:

> Test interview in lab (with videorecording)

> Field interview (target from real sample)

[2-K] Sources of biases in survey interviewing

Researcher

o invalid operationalizations

o incomprehensible questions

o biased/non-neutral wording

o context effects within questionnaire

* improper sampling rationale

Interviewer

o inappropriate social behavior

o influencing respondent

o faulty conduct of interv.: wording, seq. etc

o cheating: ...

o defective note-taking & coding

* neglect of sampling instructions

Respondent

o lack of comprehension

o response tendencies => cf list below

o no response

o lying

* interview refusal

* unavailability

Situation

o location/place

o other people present

o time pressure

Response sets:

acquiescence

response deviation

central tendency

social desirability

leniency

stereotypical patterns
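Acquiescence can be screened for when the questionnaire contains balanced item pairs, i.e., a statement and its reversed counterpart. A respondent who agrees with both members of a pair is answering inconsistently in the "yes" direction. The sketch below computes the share of such pairs. The item names, the pairing and the agreement threshold (4 or 5 on a 1..5 scale) are assumptions for illustration, not a standard index.

```python
def acquiescence_share(answers, pairs, agree_threshold=4):
    """Share of balanced item pairs on which a respondent agreed with both statements.

    answers: dict mapping item name -> score on a 1..5 disagree...agree scale
    pairs:   list of (item, reversed_item) tuples
    """
    if not pairs:
        raise ValueError("at least one balanced item pair is needed")
    both_agreed = sum(
        1
        for item, reversed_item in pairs
        if answers[item] >= agree_threshold and answers[reversed_item] >= agree_threshold
    )
    return both_agreed / len(pairs)


# hypothetical pairs, e.g. "q1" = "Recycling is worth the effort",
# "q1r" = "Recycling is not worth the effort"
PAIRS = [("q1", "q1r"), ("q2", "q2r")]
print(acquiescence_share({"q1": 5, "q1r": 4, "q2": 4, "q2r": 2}, PAIRS))  # 0.5
```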

[2-L] Criteria for assessing survey approaches

| Quality criterion | Pers. interview | Tel. interview | Mail survey | Group session | Internet-based |
|---|---|---|---|---|---|
| Participation rate | | | | | |
| Respondent is specified | | | | | |
| Data quality | | | | | |
| Advanced scaling feasible | | | | | |
| Complex topics | | | | | |
| Sensitive issues | | | | | |
| Multimethod questionnaires feasible | | | | | |
| Costs per case | | | | | |
| Time consumption | | | | | |

Note: Assessments by Bernd Rohrmann (1998)

[2-M] Data collection thru Analysis of Documents & Traces

Types of data sources

> personal doc. (letters, diaries, ...)

> archives: gov./authorities, firms, clubs, ...

> official statistics (demographical material)

> purchase records & customer counts

> activity/usage traces (incl. erosion/wear)

> accretion of objects (eg garbage, hobby items)

> material culture (eg room decoration, ...)

> books, journals, newspapers, ...

> photographs, videos, films

Some special techniques

* lost-letters

* behavior mapping via hodometers or radar rec.

* graffiti analysis

Features and Pros & Cons

o unobtrusive / non-reactive

o specific validity & uniqueness

o objective, less prone to cogn. biases

o complex coding & content analysis

o individual vs collective data

o longitudinal options

o privacy/confidentiality issues

o cause/effect reasoning restricted

Data analysis

-> Classification & content analysis

-> Software: e.g., QualPro; NUD*IST; Ethnograph
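Computer-assisted content analysis often starts from a category dictionary: words are assigned to content categories, and their relative frequencies are counted per document. The sketch below illustrates this for a question like <6> in [1-E] (positive vs negative words). The two word lists are tiny hypothetical stand-ins, and the sketch does not reproduce how the software packages named above work.

```python
import re
from collections import Counter

# hypothetical mini-dictionary; real studies use validated category lists
CATEGORIES = {
    "positive": {"great", "fun", "happy", "love", "good"},
    "negative": {"boring", "sad", "hate", "bad", "awful"},
}


def code_text(text, categories=CATEGORIES):
    """Relative frequency of each category's words among all words of a text."""
    words = re.findall(r"[a-z']+", text.lower())
    hits = Counter()
    for word in words:
        for category, vocabulary in categories.items():
            if word in vocabulary:
                hits[category] += 1
    return {c: (hits[c] / len(words) if words else 0.0) for c in categories}


print(code_text("We had a great day, it was fun but the bus was boring."))
```

In this example, 2 of the 13 words fall into the positive and 1 into the negative category. Coding rules and coder reliability still have to be checked, just as for behavior observation.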

[2-N] Data collection thru Physical/psychophysiological recordings

Options include:

o physical body characteristics (e.g., height, weight, shape, symmetry, ...)

o motor response (e.g., pressing a button, tapping, nodding, eye blinking, ...)

o reaction time <RT> {Bessel 1822}

o skin conductance response <SCR> <SRL, GSR, EDA> {Féré 1888, Tarchanoff 1890}

o electrocardiogram <ECG>

o heart rate, pulse volume, inter-beat rate <IBR>

o blood pressure (systolic/diastolic) <BP>

o electroencephalogram <EEG> {Berger 1929}

o event-related potential <ERP>

o magnetic resonance imaging <MRI, fMRI>

o tomography <e.g., PET, CAT>

o electromyogram <EMG>

o tremor, micro-vibration <MV>

o single-cell recording

o body temperature (skin, core body)

o respiration rate <RR> & depth

o pupillometrics <PR>

o eye movement (e.g., electro-oculography) <EOG>

o bio-chemical indicators (e.g., magnesium, cortisol, ...) <analysis of blood, urine, saliva etc>

o genetic data (e.g., DNA analysis, candidate genes)
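Cardiac measures are often recorded as inter-beat intervals (the time between successive R-waves of the ECG) and then converted to heart rate in beats per minute. The minimal sketch below assumes intervals given in milliseconds; the function names and data are hypothetical. The mean of the beat-by-beat rates differs from the rate over the whole period, so a report should state which of the two is used.

```python
from statistics import mean


def beat_to_beat_rate(ibi_ms):
    """Instantaneous heart rate (beats/min) for each inter-beat interval in ms."""
    if any(interval <= 0 for interval in ibi_ms):
        raise ValueError("inter-beat intervals must be positive")
    return [60000 / interval for interval in ibi_ms]


def period_rate(ibi_ms):
    """Heart rate over the whole period: number of beats divided by elapsed time."""
    return 60000 * len(ibi_ms) / sum(ibi_ms)


intervals = [1000, 750, 800]  # hypothetical intervals in ms
print(beat_to_beat_rate(intervals))  # [60.0, 80.0, 75.0]
print(round(mean(beat_to_beat_rate(intervals)), 1))  # 71.7
print(round(period_rate(intervals), 1))  # 70.6
```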

Psychological constructs aimed at:

e.g.

> perception/cognition of ...

> attention

> sensory thresholds & discrimination

> wakefulness vs. sleep, activity level

> arousal, stress, ...

> emotional/affective state

> performance in ...

> …

Main advantages of psychophys. measures:

+ highly developed

+ objective

+ not language-dependent

+ far less prone to cognitive biases

Problems with psychophysiological variables:

- validity <relation psychol./physiol. variables?>

- obtrusiveness / reactivity

- costs (money, time, staff)

- less feasible in field research

Non-Lab measurement techniques

=> portable devices

=> telemetric data processing

Special issue:

>>> USING ANIMALS FOR DATA COLLECTION ?!?

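[2-O] General features of data collection methods

Criteria for comparative assessment of data collection approaches: Q O P D (Q = questionnaires, O = observation, P = physical/psychophysiological recordings, D = documents & traces)

{to be summarized by student after discussion in class}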

> validity (re constructs)

> objectivity, reliability <cf biases>

> obtrusiveness, reactivity, "ethicality"

> feasibility/practicality (re procedures, instruments, settings, respondents, timing)

> costs (money, time, personnel, equipment)

> researcher's competence

General threats to validity

> confounded designs

> non-representative/distorted/irrelevant samples

> invalid instruments

> biased data gathering

e.g.

o "reactivity effect"

o "experimenter bias" (ROSENTHAL)

o "demand characteristics" (ORNE)

o "subject bias" (eg eval. apprehension, ROSENBERG)

o "Hawthorne" effects

Four types of validity criteria for research findings

> internal validity (re causal inferences)

> external validity (re generalization)

> interpretability

> applicability

=> Researcher needs to explicate range/scope of validity

[3-A] Sampling (Mini-Lecture)

Main issues:

> target population

> sampling units

> selection mode

> sample size

> participation & response rates

> relevance of sample quality

Target population = ??

> universe vs. "sampling frame"

> full survey / sample / case study

Sampling units:

> people

> locations/places

> points in time

> events, behaviors, circumstances, ...

> documents

Selection mode = sampling rationale

Random

> randomized across elements

> stratified, multi-stage random

Factor-oriented

=> quota-sampling, dimensional sampling (factor combinations)

Related topics:

> the principle of "probability" sampling

> means: ballot box, lottery, lists, ...

> (dis-)proportionate stratified sampling

> area-sampling

> cluster sampling

> ad-hoc, convenience, haphazard sampling

> purposive sampling

> snowball sampling
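Simple random selection and proportionate stratified selection from a sampling frame can be sketched with Python's standard `random` module. The frame, the stratum variable and the function names are hypothetical. With proportionate allocation, each stratum receives n x (stratum size / frame size) units; rounding can make the total differ slightly from n.

```python
import random


def simple_random_sample(frame, n, seed=None):
    """Every subset of size n has the same chance of selection."""
    return random.Random(seed).sample(frame, n)


def proportionate_stratified_sample(frame, stratum_of, n, seed=None):
    """Random sample within each stratum, allocated in proportion to stratum size."""
    if n > len(frame):
        raise ValueError("sample size exceeds the sampling frame")
    rng = random.Random(seed)
    strata = {}
    for unit in frame:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for units in strata.values():
        allocation = round(n * len(units) / len(frame))  # may not sum exactly to n
        sample.extend(rng.sample(units, allocation))
    return sample


# hypothetical frame: 60 urban and 40 rural households
frame = [(i, "urban" if i < 60 else "rural") for i in range(100)]
sample = proportionate_stratified_sample(frame, stratum_of=lambda unit: unit[1], n=10, seed=1)
print(sum(1 for unit in sample if unit[1] == "urban"))  # 6
```

A disproportionate design would instead fix the allocation per stratum (e.g., oversampling a small group for sub-group analyses) and reweight the strata in the analysis.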

Sample size: considerations

> heterogeneity of population

> need for sub-group analyses

> size of estimation error (cf. ->power analysis)

> resource economy

> availability
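For the "size of estimation error" consideration, a common rule of thumb applies when estimating a proportion p with margin of error e at about 95% confidence (z = 1.96):

- Basic size: n0 = z^2 p(1 - p) / e^2.
- Optional finite population correction: n = n0 / (1 + (n0 - 1)/N).

The sketch uses p = 0.5 as the most conservative default. Dividing by an assumed response rate gives the number of people to approach. This covers estimation precision only; power analysis for hypothesis tests is a separate calculation. Function names are hypothetical.

```python
import math


def required_n(margin, p=0.5, z=1.96, population=None):
    """Sample size to estimate a proportion p within +/- margin (z = 1.96 ~ 95%)."""
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)  # finite population correction
    return math.ceil(n0)


def gross_sample(net_n, expected_response_rate):
    """Number of people to contact so that about net_n complete the survey."""
    return math.ceil(net_n / expected_response_rate)


print(required_n(0.05))  # 385
print(required_n(0.05, population=2000))  # 323
print(gross_sample(385, 0.6))  # 642
```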

Participation/response rates:

i.e., percentage of people in target sample or contactable sample actually interviewed

> causes of sample losses (re targeted or contacted people)

> indices

> implications

> techniques to reduce sample losses
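The indices can be made concrete with a set of simplified definitions:

- The response rate relates completed interviews to all eligible sample members.
- The contact rate shows how many eligible people were reached.
- The cooperation rate shows how many of those reached took part.

These formulas and disposition categories are assumptions for illustration. Survey organizations use more detailed standard definitions (e.g., those of AAPOR), and published rates are only comparable if their definitions are stated.

```python
def response_indices(gross, ineligible, noncontacts, refusals, completes):
    """Simplified sample-loss indices from final case counts."""
    eligible = gross - ineligible  # e.g. minus invalid addresses, people outside the target group
    contacted = eligible - noncontacts
    if completes + refusals > contacted:
        raise ValueError("completes plus refusals exceed the contacted cases")
    return {
        "response_rate": completes / eligible,
        "contact_rate": contacted / eligible,
        "cooperation_rate": completes / contacted,
        "refusal_rate": refusals / eligible,
    }


# hypothetical case counts of a telephone survey
rates = response_indices(gross=1000, ineligible=50, noncontacts=150, refusals=190, completes=560)
print({name: round(value, 3) for name, value in rates.items()})
# {'response_rate': 0.589, 'contact_rate': 0.842, 'cooperation_rate': 0.7, 'refusal_rate': 0.2}
```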

Relevance of sample quality:

> representativeness

> generalizability - external validity

[4-A] "Thinking in structures": A few bits & pieces

Note: Material on this session is not yet available on my WebSite, except for the following topics:

Three types of models which link concepts/acts/variables

> component model

> process model (e.g., flow-chart)

> causal model

Some meanings of arrows used in graphs depicting models

A --> B may indicate:

A causes B (e.g., intelligence enhances depression)

B is a component of A (e.g., apples belong to the category fruit)

A is followed by B (e.g., breakfast then biking to university)

A <--> B may indicate:

A and B are conceptually linked

A and B are statistically correlated

A and B are subjected to mutual causality (<- / ->)

Suggestions for graphs of causal models

When designing graphic representations of models which are meant to explicate relationships between variables, my conventions are mainly these:

X --> Y X influences Y (i.e., direct causality)

X -- Y X and Y correlate (no proposition about causality)

X <--> Y mutual causality between X and Y (or indicated by 2 arrows, <- ->)

M => [X --> Y] A moderator M is influencing the relationship between X and Y

In a larger model which contains a whole set of variable relationships, A --> B refers to a relationship to be investigated and C ---> D to a relationship which is assumed but not studied in the current research.
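The moderator convention M => [X --> Y] has a simple statistical counterpart when M is a grouping variable: the slope of Y on X differs between the levels of M. The sketch below estimates the least-squares slope within each group. For a binary moderator coded 0/1, the difference between the two slopes equals the X x M interaction coefficient of a regression with a product term (Y = b0 + b1 X + b2 M + b3 XM). A continuous moderator would require that product-term regression instead. Data and function names are hypothetical.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys regressed on xs."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


def slopes_by_moderator(records):
    """Slope of Y on X within each level of a categorical moderator M.

    records: iterable of (x, y, m) tuples
    """
    groups = {}
    for x, y, m in records:
        xs, ys = groups.setdefault(m, ([], []))
        xs.append(x)
        ys.append(y)
    return {m: ols_slope(xs, ys) for m, (xs, ys) in groups.items()}


# hypothetical data: X has a weak effect on Y when M = 0 and a strong one when M = 1
data = [(x, 1 + 0.5 * x, 0) for x in range(1, 5)] + [(x, 1 + 2 * x, 1) for x in range(1, 5)]
print(slopes_by_moderator(data))  # {0: 0.5, 1: 2.0}
```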

Some statistical techniques to analyze variable structures

> Multi-dimensional scaling (metric or non-metric MDS)

> Cluster analysis (including hierarchical CA)

> Factor analysis (orthogonal or oblique FA)

> Structural modelling (e.g., LISREL, EQS, AMOS)

> Quantitative network analysis (of group structures & social networks)

Evaluation research is an important methodology and relevant to all professional courses. However, there is not enough time in the current GRM set-up to deal with this topic properly (besides the 'mini-summary' given in my final GRM lecture). Below I list a few core points and provide a special reading list for those who want to study evaluation research on their own.

[5-A] Definition of "evaluation" (within the social sciences):

>>> "The scientific assessment of the content, process and effects (consequences, outcomes, impacts) of an intervention (measure, strategy, program) and their assessment according to defined criteria (goals, objectives, values)"

[5-B] Main methodological issues:

Three principal perspectives:

> content-orientation (i.e., input/message evaluation)

> process-orientation (i.e., formative evaluation)

> outcome-orientation (i.e., summative/impact evaluation)

Study design:

> longitudinal before/after study <2+ points in time>

> control group (not exposed to the intervention)

> control of measurement biases (e.g., expectancy bias)
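A before/after design with a control group supports a simple effect estimate, the difference-in-differences: the change in the intervention group minus the change in the control group. This nets out trends that affect both groups (season, media coverage, ...). The minimal sketch below uses invented scores. It assumes both groups would have changed equally without the intervention, and it gives no significance test.

```python
def _average(values):
    return sum(values) / len(values)


def difference_in_differences(treated_before, treated_after, control_before, control_after):
    """Change in the intervention group minus change in the control group."""
    treated_change = _average(treated_after) - _average(treated_before)
    control_change = _average(control_after) - _average(control_before)
    return treated_change - control_change


# invented scores (e.g. a recycling index) before and after a campaign
effect = difference_in_differences([10, 12, 11], [15, 17, 16], [10, 11, 12], [12, 13, 14])
print(effect)  # 3.0
```

Without the control group, the naive before/after change in the intervention group (5.0) would have overstated the effect.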

Reference for comparisons:

> normative program goals (as stated by institution)

> previous situation

> alternative interventions/strategies

Evaluator

> internal ('in-house')

> external (independent researcher)

[5-C] Substantive & methodological reasons for empirical evaluations:

> It's a matter of responsibility to check whether aims of interventions are achieved, i.e., strategies are successful and results sufficient.

> Evaluation results can demonstrate not only whether but also why a program works (or not) and thus guide the improvement of programs.

> Intuitive assessments of the program's effectiveness can easily fail because of wrong cause-effect attributions (spurious causality).

> Evaluation provides an empirical basis for a decision between alternative intervention programs/strategies.

> As campaigns are laborious and usually rather expensive (in terms of costs, personnel and time), evaluation can help to justify the efforts.

[5-D] A short list of literature re evaluation research

Textbooks and generic articles on the methodology of program evaluation include:

Boruch, R. F., Reichardt, C. S., & Miller, L. J. (1998). Randomized experiments for planning and evaluation: A practical guide. Evaluation and Program Planning, 21, 124-126.

Chelimsky, E., & Shadish, W. (Eds.). (1997). Evaluation for the 21st century. Thousand Oaks: Sage.

Cook, T. D., & Reichardt, C. S. (1992). Qualitative and quantitative methods in evaluation research. Beverly Hills, CA: Sage.

Cooksy, L. J., Gill, P., & Kelly, P. A. (2001). The program logic model as an integrative framework for a multimethod evaluation. Evaluation and Program Planning, 24, 119-128.

Fink, A. (1995). Evaluation fundamentals: Guiding health programs, research, and policy. London: Sage.

Kemmis, S. (1994). A guide to evaluation design. Evaluation News and Comment, 3, 2-11.

Patton, M. Q. (1997). Utilization-focused evaluation: The new century text. Thousand Oaks: Sage.

Posavac, E., & Carey, R. (1997). Program evaluation: Methods and case studies. Hempstead: Prentice Hall.

Rossi, P. H., & Freeman, H. E. (1993). Evaluation: A systematic approach. Beverly Hills: Sage.

Sechrest, L., & Figueredo, A. J. (1993). Program evaluations. Annual Review of Psychology, 44, 645-674.

For research designs in applied settings consult:

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design and analysis issues for field settings. Chicago: Rand McNally.

Monette, D., Sullivan, T., & DeJong, C. (1994). Applied social research. Fort Worth: Harcourt Brace.

Neuman, W. L. (1997). Social research methods: Qualitative and quantitative approaches. New York: Allyn & Bacon.

Robson, C. (1994). Real world research: A resource for social scientists and practitioner-researchers. Oxford: Blackwell.

Singleton, R. A., & Straits, B. C. (1999). Approaches to social research. Oxford: Oxford University Press.

Sommer, B., & Sommer, R. (1991). A practical guide to behavioural research: Tools and techniques. Oxford: Oxford University Press.

The validity of human responses and judgmental biases are discussed in:

Adair, J. G. (1978). The human subject: The social psychology of the psychological experiment. Boston: Little.

Dake, K. (1991). Orienting dispositions in the perception of risk: An analysis of contemporary worldviews and cultural biases. Journal of Cross-Cultural Psychology, 22, 61-82.

Kahneman, D., Slovic, P., & Tversky, A. (1982). Judgment under uncertainty: Heuristics and biases. Cambridge: Cambridge University Press.

Rosnow, R. L., & Rosenthal, R. (1997). People studying people: Artifacts and ethics in behavioral research. #: Freeman & Company.

Rothman, A. J., Klein, W. M., & Weinstein, N. D. (1996). Absolute and relative biases in estimations of personal risk. Journal of Applied Social Psychology, 26, 1213-1236.

Weinstein, N. D., & Klein, W. M. (1996). Unrealistic optimism: Present and future. Social and Clinical Psychology, 1, 1-2.

Yamagishi, K. (1994). Consistencies and biases in risk perception: I. Anchoring processes and response-range effect. Perceptual and Motor Skills, 79, 651-656.

| Task | Timing |
|---|---|
| >> CONCEPTUALIZATION | |
| Thinking about an issue | |
| Lit search & reading | |
| Determining the issue to be investigated | |
| Stating research questions | |
| Theoretical framework & hypotheses | |
| Methodology, design, mode of data coll., sampling | |
| Availability of resources | |
| Specified time planning | |
| Ethical issues | |
| >> DATA COLLECTION | |
| Construction of response means, scales, questionnaires | |
| Place of data collection | |
| Instruments, technical devices, exp. set-up | |
| Getting participants/respondents | |
| Running pretests | |
| Revision of procedures & instruments | |
| Main data collection | |
| >> ANALYSIS OF DATA | |
| Data coding | |
| Checking for errors | |
| Statistical data description | |
| Item analyses | |
| Testing hypotheses | |
| >> WRITING THE RESEARCH REPORT | |
| Substantive structure | |
| Length of text | |
| Text processing | |
| Asking for reviews | |
| Preparation of tables & graphs | |
| List of references | |
| Printing | |
| Final formal checks | |
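The 'Timing' column can be filled by turning estimated durations into calendar weeks. The minimal sketch below assumes strictly sequential phases and uses invented durations in weeks; the function name is hypothetical. Real plans let phases overlap (e.g., reading continues during data collection) and should include buffer time.

```python
def sequential_schedule(phases, start_week=1):
    """Turn (phase, duration in weeks) pairs into (phase, first week, last week)."""
    plan = []
    week = start_week
    for name, weeks in phases:
        if weeks <= 0:
            raise ValueError(f"duration of {name!r} must be positive")
        plan.append((name, week, week + weeks - 1))
        week += weeks
    return plan


# invented durations in weeks
phases = [
    ("Conceptualization", 6),
    ("Data collection", 10),
    ("Analysis of data", 6),
    ("Writing the research report", 8),
]
for name, first, last in sequential_schedule(phases):
    print(f"{name:<30} weeks {first:>2}-{last:>2}")
```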

| End of GRM materials -- March 2003 B.R. |